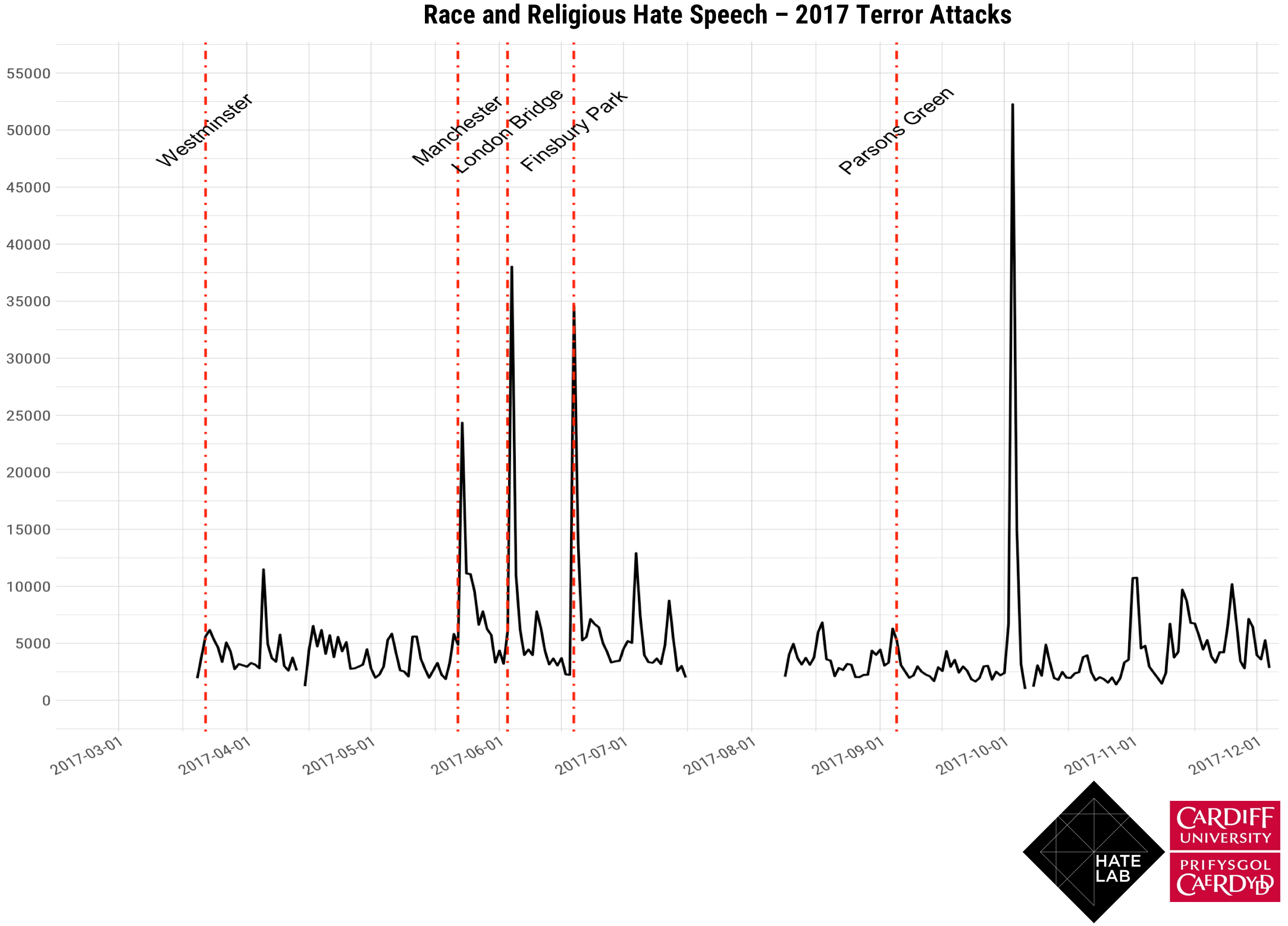

This chart shows race and religious hate speech posted globally on Twitter throughout 2017. The chart represents both moderate and extreme hate speech, with original tweets and retweets combined. Discernible spikes in hate speech coincide with key events during the year, notably the UK terror attacks in Westminster, Manchester, London Bridge and Finsbury Park. The large spike in October is related to the mass shooting in Las Vegas, US, where for a period of time assumptions were being made on Twitter that the attack was an extremist Islamic terrorist incident, fuelled in part by a false claim by ISIS that the shooter was acting on their behalf.

‘Trigger’ events are incidents that motivate the production and spread of hate both online and offline. Research has shown that hate crime and hate speech tend to increase dramatically in the aftermath of certain antecedent events, such as terror attacks and controversial political votes. Following trigger events, it is often social media users who are first to publish a reaction (Williams and Burnap 2016).

HateLab Analysis

The Enablers and Inhibitors of Online Hate Speech

Statistical modelling of past terrorist events showed that, compared with all other types of online content, online hate speech was least likely to be retweeted in volume and to survive for long periods of time, supporting the ‘half-life’ hypothesis (Williams and Burnap 2016). Where hate speech is retweeted following an event, there is evidence that this activity emanates from a core group of like-minded individuals who seek out each other’s messages. These Twitter users act like an ‘echo chamber’, where grossly offensive hateful messages reverberate around members, but rarely spread widely beyond them.

Tweets from verified, media, government and police accounts gain significant traction during and in the aftermath of terrorist events. As these tweeters are least likely to produce and propagate hate speech, and are more likely to engage in the spread of positive messages following ‘trigger’ events, they prove to be effective vehicles for stemming negative content through the production of counter-hate-speech. In particular, the dominance of traditional media outlets on Twitter, such as broadsheet and TV news, leads to the conclusion that these channels still represent a valuable pipeline for calls to reason and calm following possibly fractious events of national interest. However, where newspaper headlines include divisive content, HateLab analysis suggests these can increase the production of online hate speech.

Fake Accounts and Bots and the Production of Hate

Fake Twitter accounts purportedly sponsored by overseas state actors spread fake news and promoted xenophobic messages following the 2017 terror attacks in the UK, with the potential consequence of raising tensions between groups. In the immediate wake of the Manchester and London Bridge terror attacks, fake accounts linked to Russia sent racially divisive messages. These messages attempted to ignite and ride the wave of anti-Muslim sentiment and public fear. A key tactic used by these fake accounts was to attempt to engage far-right accounts using hashtags and appealing profiles, thereby increasing the reach of their messages if a retweet was made. In the minutes following the Westminster terrorist attack, a suspected Russian fake social media account tweeted fake news about a woman in a headscarf apparently walking past and ignoring a victim. This was retweeted thousands of times by far-right Twitter accounts with the hashtag ‘#BanIslam’. The additional challenge presented by these fake accounts is that they are unlikely to be susceptible to counter-speech and traditional policing responses. It therefore falls upon social media companies to detect and remove such accounts as early as possible in order to stem the production and spread of divisive and hateful content.

The Characteristics of Fake Accounts and Bots

In October 2018 Twitter published over 10 million tweets from around 4,600 Russian and Iranian-linked fake/bot accounts. Bots are automated accounts that are programmed to retweet and post content for various reasons. Fake accounts are semi-automated, meaning they are routinely controlled by a human or group of humans, allowing for more complex interaction with other users, and for more nuanced messages in reaction to unfolding events. While not all bots and fake accounts are problematic (some retweet and post useful content), many have been created for more subversive reasons, such as influencing voter choice in the run-up to elections, and spreading divisive content following national events. Bots can sometimes be detected by characteristics that distinguish them from human users. These characteristics include a high frequency of retweets/tweets (e.g. over 50 a day), activity at regular intervals (e.g. every fifteen minutes), content that contains mainly retweets, the ratio of activity from a mobile device versus a desktop device, accounts that follow many users but have a low number of followers, and partial or unpopulated user details (e.g. photo, profile description, location etc.). Fake accounts are harder to detect, given they are partly controlled by humans, but telltale signs include accounts less than 6 months old, profile photos that can be found elsewhere on the internet and clearly do not belong to the account, and a suspicious number of followers.
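
The bot characteristics listed above lend themselves to a simple rule-based check. The following Python sketch is illustrative only and is not HateLab's or Twitter's detection method. The `AccountActivity` record, its field names and the `bot_signals` function are hypothetical. The "over 50 a day" threshold comes from the text; the cut-offs for interval regularity, retweet share and the following/follower imbalance are assumptions chosen for the example. The mobile versus desktop ratio is left out because the text does not say which direction indicates automation.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List


@dataclass
class AccountActivity:
    """Hypothetical summary of one account (field names are illustrative)."""
    post_times_minutes: List[float]  # posting times, in minutes since observation began
    days_observed: float             # length of the observation window in days
    retweet_count: int               # how many of the posts were retweets
    following: int
    followers: int
    has_photo: bool
    has_description: bool
    has_location: bool


def bot_signals(acct: AccountActivity) -> List[str]:
    """Return the names of the bot-like characteristics this account shows."""
    signals = []
    total_posts = len(acct.post_times_minutes)

    # High frequency of tweets/retweets (threshold from the text: over 50 a day).
    if acct.days_observed > 0 and total_posts / acct.days_observed > 50:
        signals.append("high_frequency")

    # Activity at regular intervals: gaps between posts barely vary.
    # Assumed cut-off: coefficient of variation of the gaps below 0.1.
    times = sorted(acct.post_times_minutes)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    if len(gaps) >= 5 and mean(gaps) > 0 and pstdev(gaps) / mean(gaps) < 0.1:
        signals.append("regular_intervals")

    # Content that is mainly retweets (assumed: more than half of all posts).
    if total_posts > 0 and acct.retweet_count / total_posts > 0.5:
        signals.append("mostly_retweets")

    # Follows many users but has few followers (assumed: at least 500
    # followed accounts and more than ten times as many as followers).
    if acct.following >= 500 and acct.following > 10 * max(acct.followers, 1):
        signals.append("follows_many_few_followers")

    # Partial or unpopulated user details.
    if not (acct.has_photo and acct.has_description and acct.has_location):
        signals.append("incomplete_profile")

    return signals


# Example: an account posting every fifteen minutes for one day.
suspect = AccountActivity(
    post_times_minutes=[15.0 * i for i in range(96)],
    days_observed=1.0,
    retweet_count=90,
    following=2000,
    followers=12,
    has_photo=False,
    has_description=False,
    has_location=False,
)
print(bot_signals(suspect))
```

A list of signals rather than a single yes/no verdict fits the text's caution that bots can only "sometimes" be detected this way: no single characteristic is conclusive, and any real system would need to weigh them against labelled data.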